Yen-Cheng Liu

I am a research scientist at Meta GenAI. My research interests span computer vision and machine learning. I am currently exploring media generation, with a particular focus on video and image generation. One of my recent projects is Meta Movie Gen, a cast of foundation models for media generation (video, image, and audio).

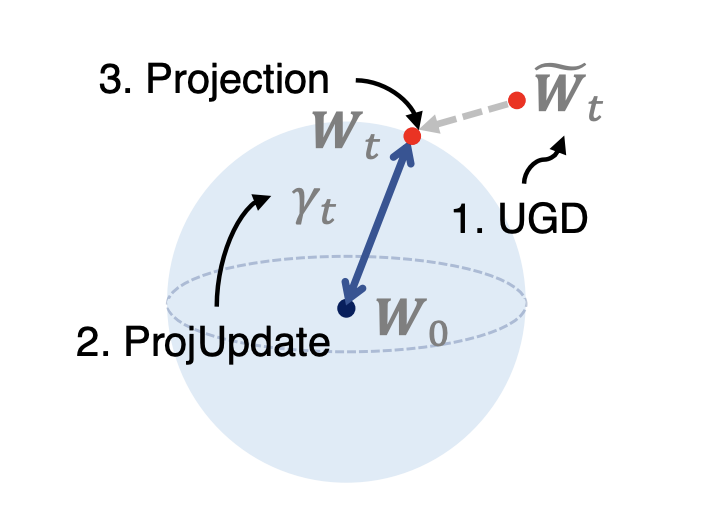

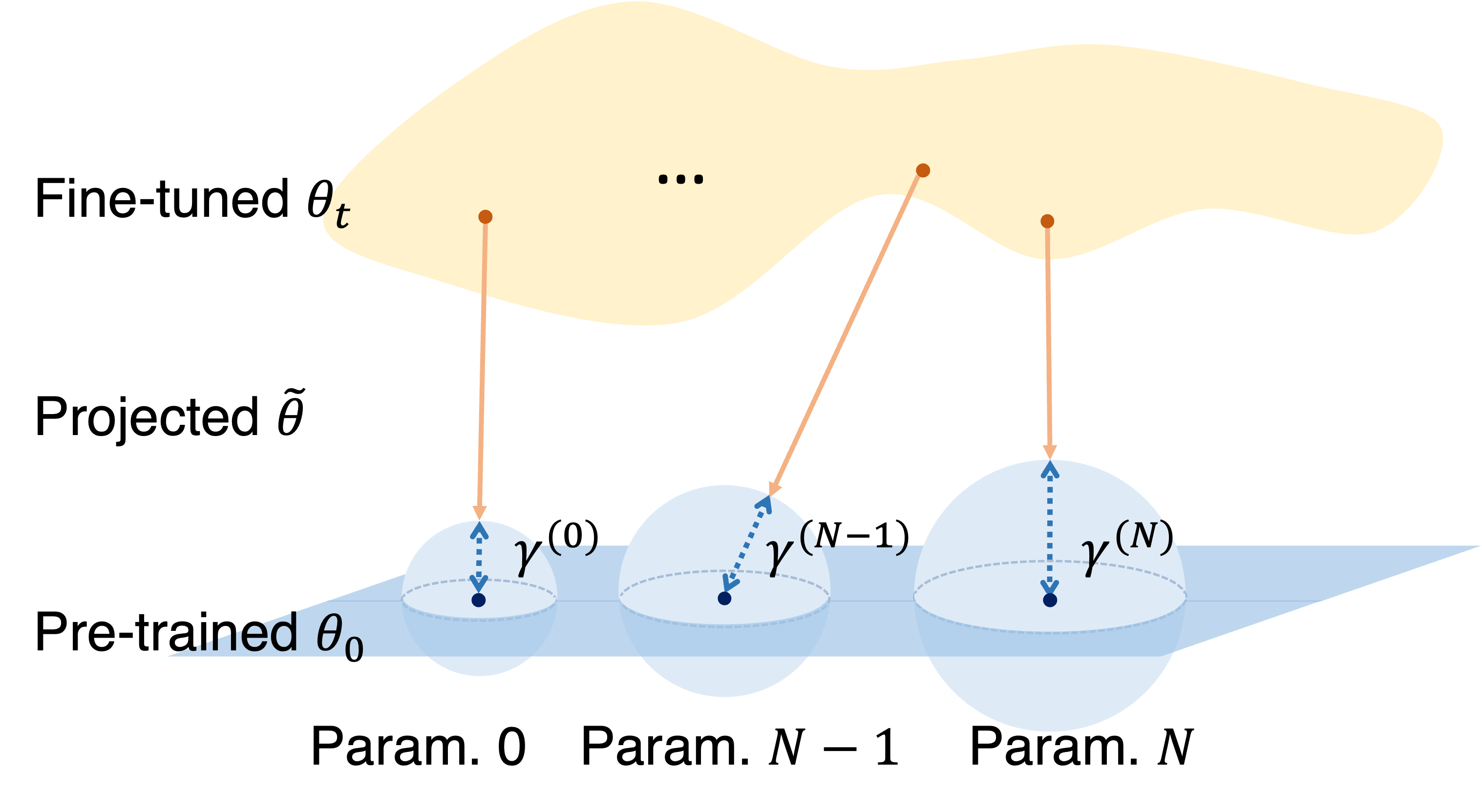

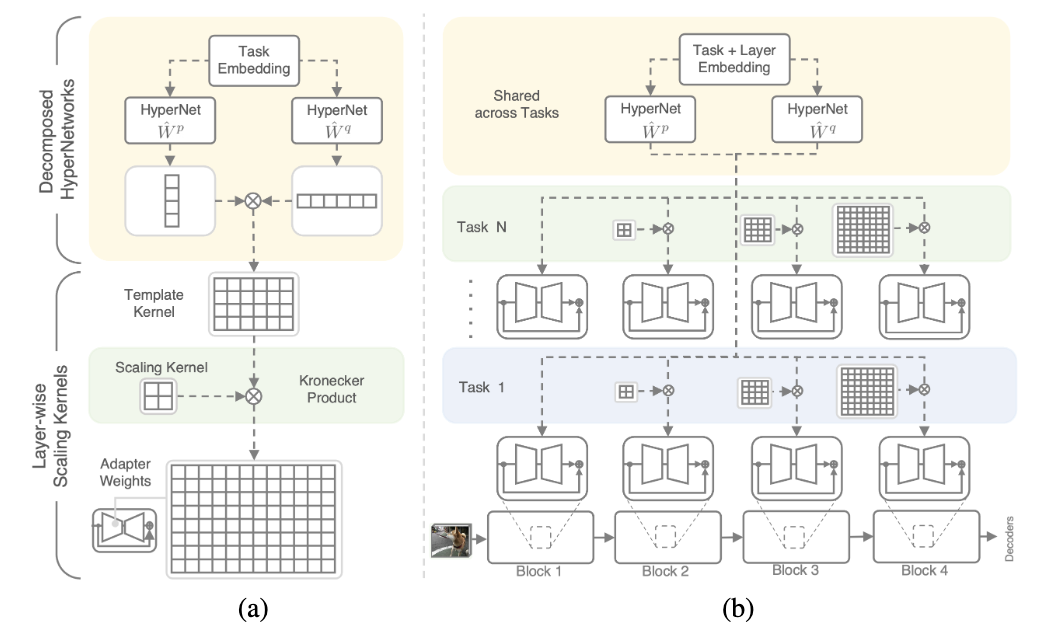

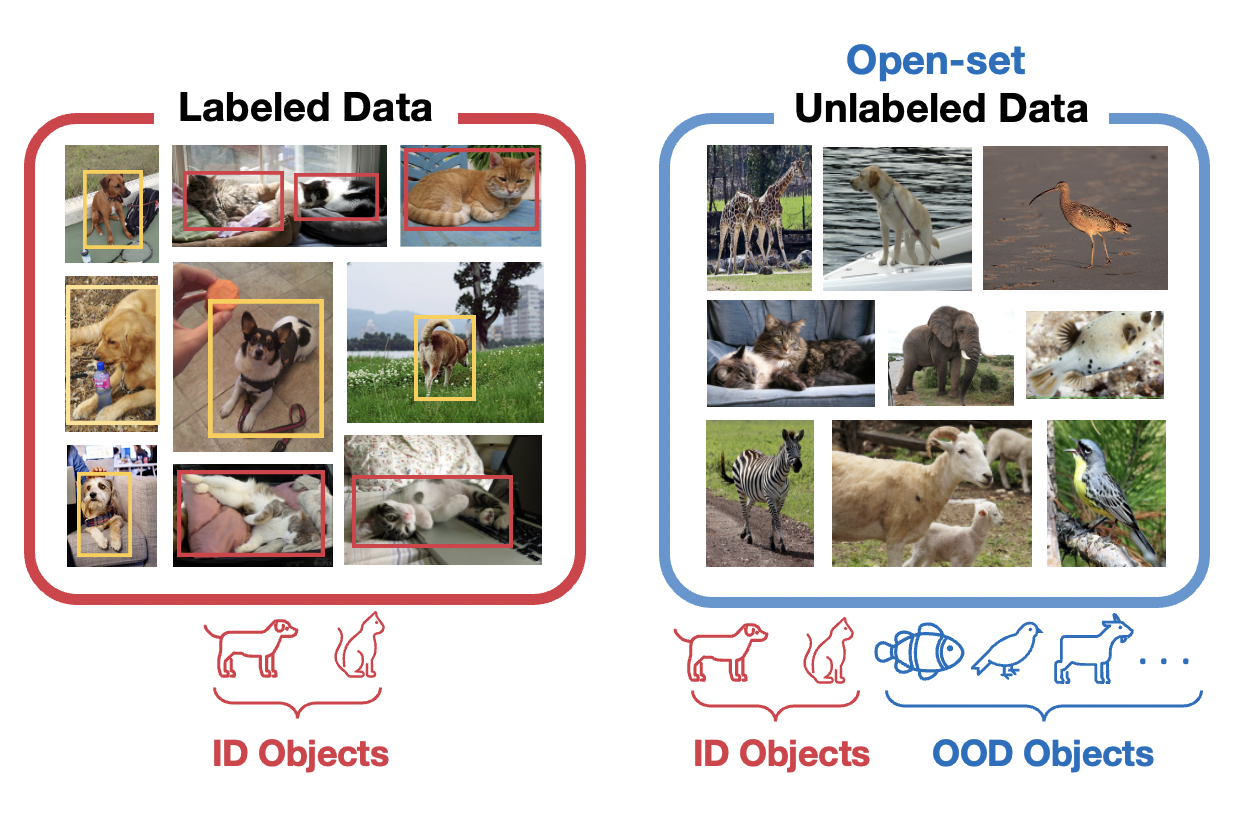

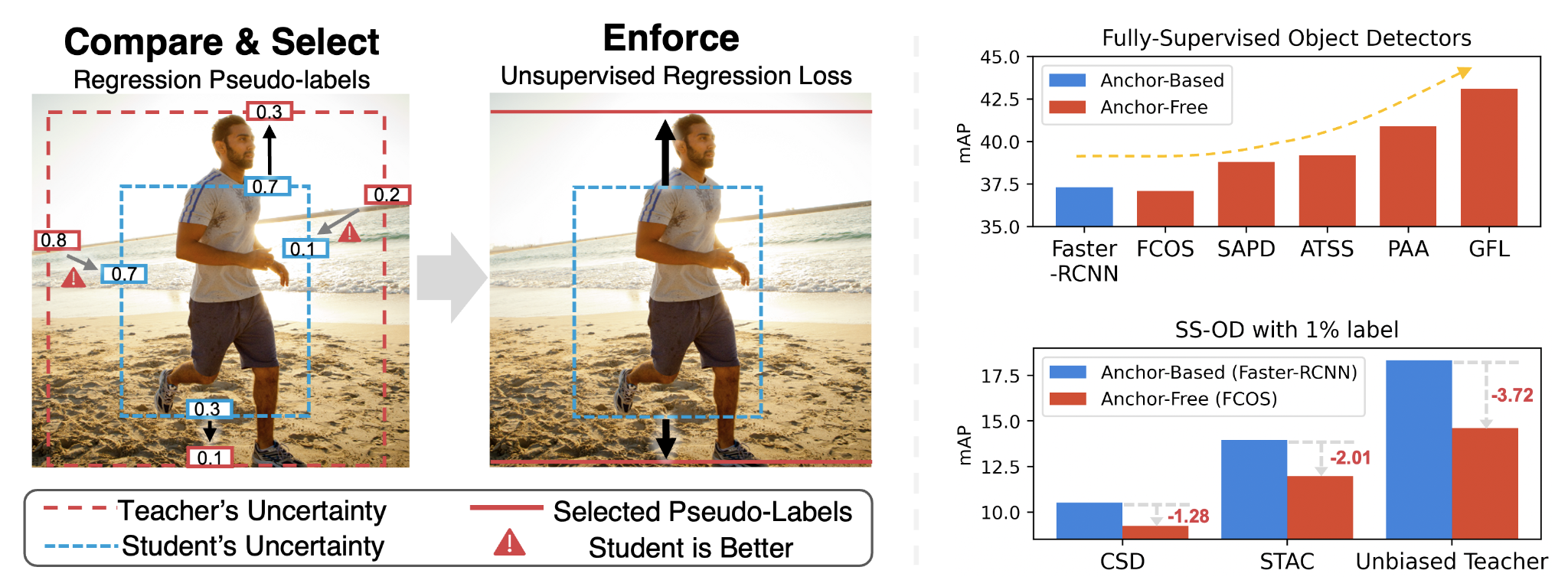

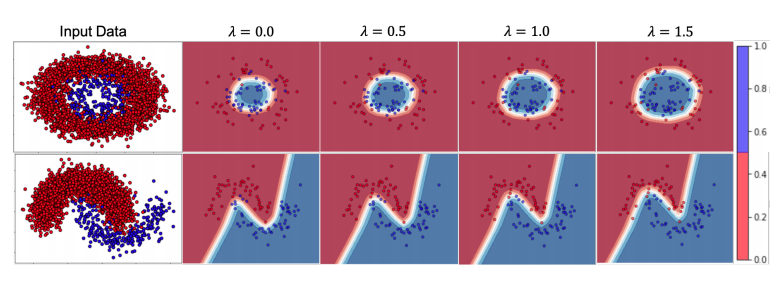

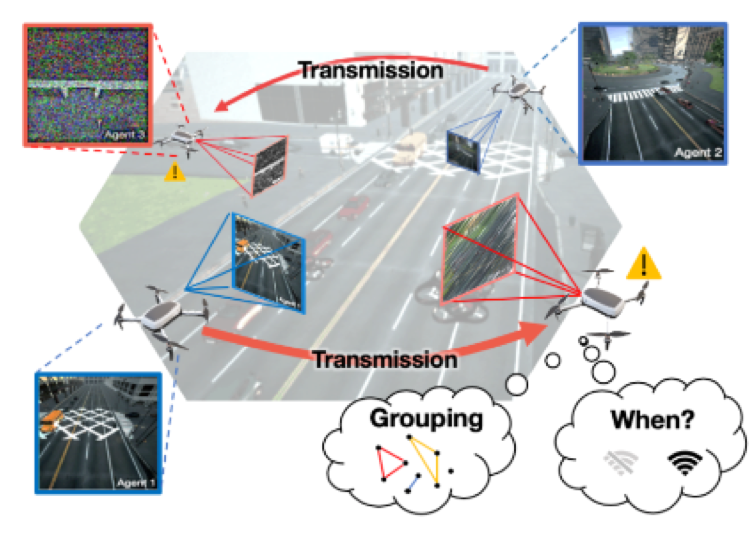

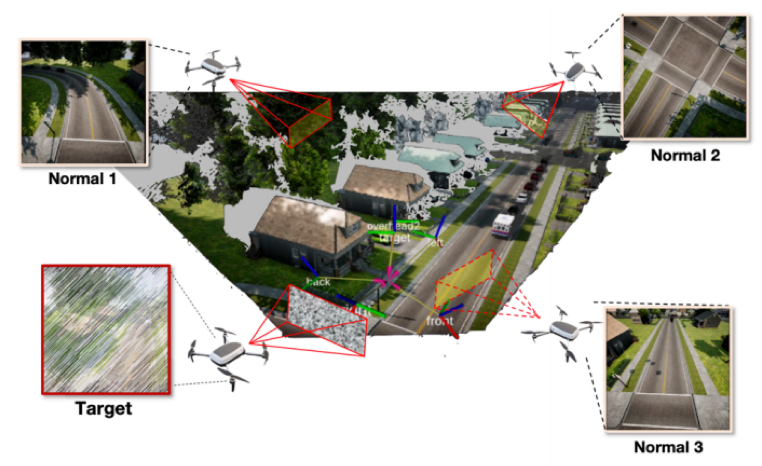

Before this, I earned my PhD from Georgia Tech, where I had the opportunity to work with Prof. Zsolt Kira. My PhD research focused on improving the parameter, data, and label efficiency of training large-scale machine learning models, which typically rely on large foundation models trained on vast amounts of data and labels.

[CV] [Google Scholar]