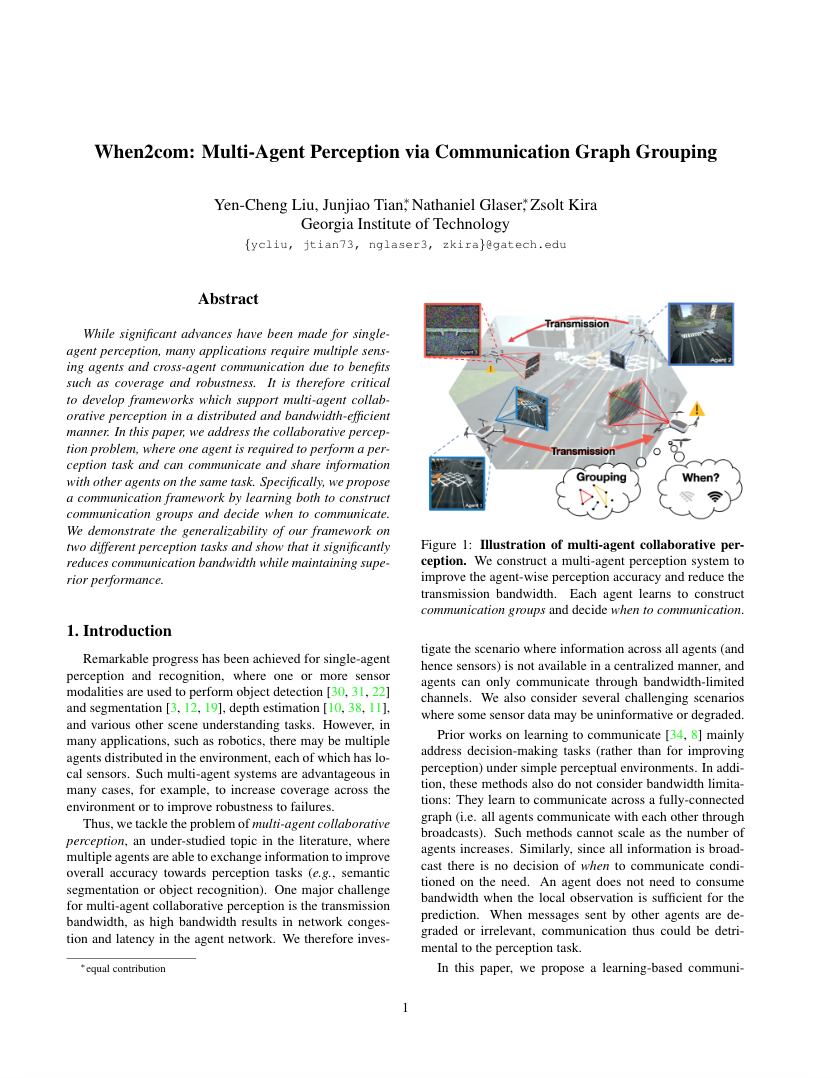

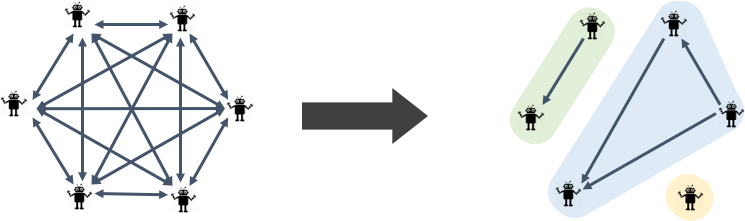

Figure: Multi-Agent Perception via Communication.

While significant advances have been made for single-agent perception (i.e., computer vision tasks from a single perspective), single-agent perception suffers from occlusion and sensor degradation. This motivates us to develop a multi-agent perception system, where one agent improves perception accuracy by communicating and sharing information with other agents.

Bandwidth usage: Each agent is required to transmit encoded visual data (high-dimensional feature maps) to other agents, and high bandwidth usage may result in network congestion and latency issues. Therefore, an efficient multi-agent perception system should be bandwidth-limited.
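To make the bandwidth concern concrete, the following sketch estimates the per-frame traffic if every agent sent its feature map to every other agent. The function name, the feature-map shape, and the 4-byte value size are illustrative assumptions, not figures from our experiments.

```python
def fully_connected_bytes(num_agents, feature_shape, bytes_per_value=4):
    """Bytes sent per frame when every agent sends its feature map to all others.

    All arguments are hypothetical; bytes_per_value=4 assumes float32 features.
    """
    values_per_map = 1
    for dim in feature_shape:
        values_per_map *= dim
    bytes_per_message = values_per_map * bytes_per_value
    # Directed links between distinct agents: N * (N - 1).
    num_links = num_agents * (num_agents - 1)
    return num_links * bytes_per_message


# Hypothetical setup: 5 agents, each with a 64 x 32 x 32 float32 feature map.
total = fully_connected_bytes(5, (64, 32, 32))
print(total / 2**20, "MiB per frame")
```

Because the number of links grows quadratically with the number of agents, the total traffic does too, which is why limiting who transmits matters.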

Tradeoff between bandwidth and accuracy: Limiting transmission bandwidth may reduce the gain in perception accuracy.

Figure: A fully-connected communication network consumes high transmission bandwidth.

Learnable 3-way handshake communication:

- Request: The degraded agent sends a compact query to the normal agents.
- Match: The normal agents compute and return matching scores.
- Connect: Based on the matching scores, the degraded agent decides which agent to communicate with.

Therefore, assuming the degraded agent(s) are known, our model learns who to communicate with [1].
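The three handshake steps can be sketched as follows. This is a minimal illustration, not our trained model: the query and key vectors are hand-written stand-ins for learned embeddings, and the dot-product scoring with a softmax is an assumed choice of matching function.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def handshake(query, keys):
    """Hypothetical handshake: return (matching scores, index of chosen agent).

    query: compact vector sent by the degraded agent (Request).
    keys:  one vector per normal agent, used to score the query (Match).
    """
    # Match: each normal agent scores the query against its own key.
    # A plain dot product is an assumption made for this sketch.
    raw = [sum(q * k for q, k in zip(query, key)) for key in keys]
    scores = softmax(raw)
    # Connect: the degraded agent picks the highest-scoring agent.
    chosen = max(range(len(scores)), key=scores.__getitem__)
    return scores, chosen


# Request: the degraded agent broadcasts a small query instead of a feature map.
query = [1.0, 0.0]
keys = [[0.2, 0.9], [0.9, 0.1], [-0.5, 0.3]]
scores, chosen = handshake(query, keys)
print(chosen, [round(s, 3) for s in scores])
```

Only the chosen agent then needs to transmit its full feature map, so the expensive transfer happens over a single link rather than all of them.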

Figure: Learnable 3-way handshaking communication.

However, an ideal communication mechanism switches transmission on when an agent needs information from other agents to improve its perception, and switches it off when the agent already has sufficient information for its own perception task. Thus, assuming the degraded agents are unknown, our model applies self-attention to learn when to communicate [2].
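One way to picture the "when" decision is to let an agent score its own key alongside the keys of the other agents and skip communication when the self-score dominates. The sketch below assumes scaled dot-product attention and a hypothetical `self_threshold`; neither detail is taken from [2].

```python
import math


def attention_weights(query, keys):
    """Softmax over scaled dot products between one query and all keys."""
    scale = math.sqrt(len(query))
    raw = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    peak = max(raw)
    exps = [math.exp(r - peak) for r in raw]
    total = sum(exps)
    return [e / total for e in exps]


def should_communicate(self_index, query, keys, self_threshold=0.5):
    """Return (decision, weights); decision is True when outside help is needed.

    self_threshold is a hypothetical cut-off: if the agent attends mostly to
    itself, its own observation is treated as sufficient.
    """
    weights = attention_weights(query, keys)
    return weights[self_index] < self_threshold, weights


query = [1.0, 0.0]
# Agent 0's own key matches its query well, so it stays silent.
confident, _ = should_communicate(0, query, [[3.0, 0.0], [0.0, 1.0], [0.0, 1.0]])
# Agent 0's own key matches poorly, so it asks the others.
degraded, _ = should_communicate(0, query, [[0.0, 1.0], [3.0, 0.0], [0.0, 1.0]])
print(confident, degraded)
```

The same scores serve both purposes: the self-entry decides whether to communicate, and the remaining entries indicate whom to ask.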

Figure: When2com model.

Reducing network complexity: As mentioned above, a fully connected communication network results in high bandwidth usage. We therefore prune the less important links according to their edge weights (i.e., matching scores).
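The pruning step can be illustrated with a small matching-score matrix. The matrix values and the fixed threshold below are made up for the example, and thresholding is only one possible pruning rule; entry `scores[i][j]` is assumed to be the weight agent `i` places on agent `j`.

```python
def prune_links(scores, threshold):
    """Zero out off-diagonal edges whose matching score is below threshold."""
    n = len(scores)
    return [
        [
            scores[i][j] if (i == j or scores[i][j] >= threshold) else 0.0
            for j in range(n)
        ]
        for i in range(n)
    ]


def count_links(matrix):
    """Count active directed links between distinct agents."""
    n = len(matrix)
    return sum(1 for i in range(n) for j in range(n) if i != j and matrix[i][j] > 0)


# Hypothetical matching scores for three agents (each row sums to 1).
scores = [
    [0.70, 0.20, 0.10],
    [0.05, 0.90, 0.05],
    [0.40, 0.35, 0.25],
]
pruned = prune_links(scores, threshold=0.3)
print(count_links(scores), "links before,", count_links(pruned), "links after")
```

In this example only agent 2, which places little weight on its own observation, keeps links to the other agents; the two confident agents transmit nothing.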

Figure: Network complexity reduction.

Figure: Visualization of our collected datasets.

[1] "Who2com: Collaborative Perception via Learnable Handshake Communication", Yen-Cheng Liu, Junjiao Tian, Chih-Yao Ma, Nathaniel Glaser, Chia-Wen Kuo, Zsolt Kira, ICRA 2020

[2] "When2com: Multi-Agent Perception via Communication Graph Grouping", Yen-Cheng Liu, Junjiao Tian*, Nathaniel Glaser*, Zsolt Kira, CVPR 2020 (* equal contribution)

This work was supported by ONR grant N00014-18-1-2829.