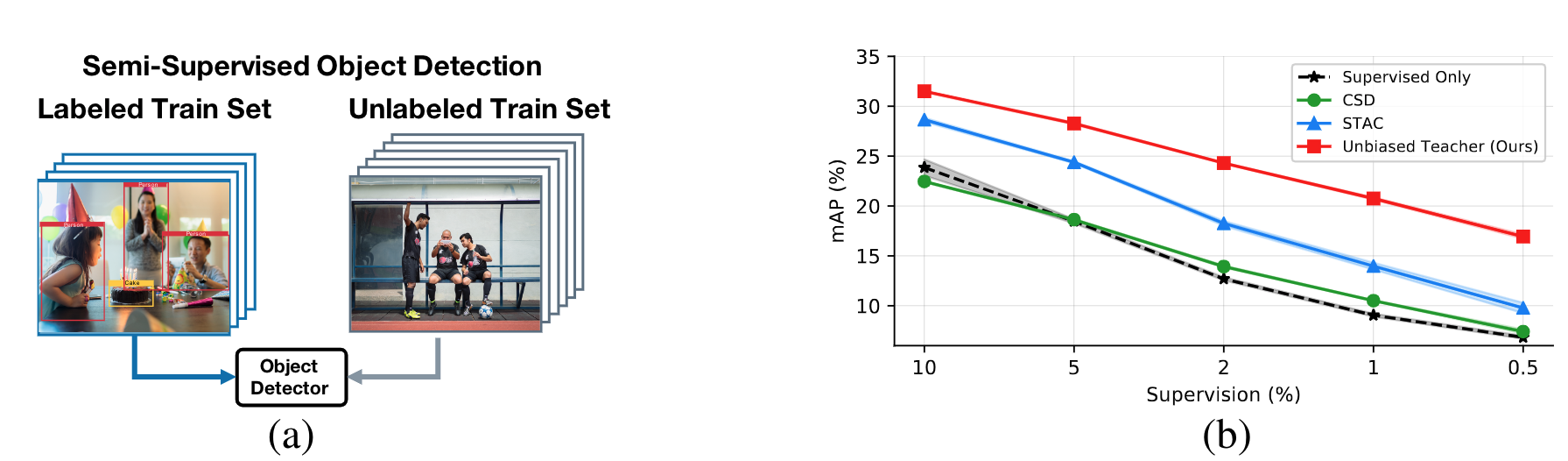

Figure: (a) Illustration of semi-supervised object detection, where the model observes a set of labeled data and a set of unlabeled data in the training stage. (b) Our proposed model efficiently leverages the unlabeled data (with label supervision ranging from 0.5% to 10%) and performs favorably against existing semi-supervised object detection works.

The availability of large-scale datasets and computational resources has allowed deep neural networks to achieve strong performance on a wide variety of tasks. However, training these networks requires a large number of labeled examples, which are expensive to acquire and annotate. As an alternative, semi-supervised learning methods have received growing attention. Yet these advances have focused primarily on image classification rather than object detection, where bounding box annotations require even more annotation effort.

A straightforward way to address Semi-Supervised Object Detection (SS-OD) is to adapt existing semi-supervised image classification methods (e.g., FixMatch). Unfortunately, the class imbalance inherent to object detection tasks impedes the use of pseudo-labeling. In addition, object detectors have far more complicated model architectures than image classifiers.
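To make "pseudo-labeling" concrete, here is a minimal sketch of the FixMatch-style rule for image classification: the model's prediction on a weakly augmented image becomes a hard label only if its highest class probability clears a confidence threshold (the FixMatch paper uses 0.95), and that label then supervises a strongly augmented view of the same image. The function names `softmax` and `fixmatch_pseudo_label` are hypothetical, and plain Python lists stand in for model outputs; this is an illustration of the general technique, not code from this project.

```python
import math
from typing import List, Optional


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over a list of class logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fixmatch_pseudo_label(weak_logits: List[float], threshold: float = 0.95) -> Optional[int]:
    """Return the argmax class if its probability clears the threshold, else None.

    `weak_logits` are the (hypothetical) model outputs on a weakly augmented image.
    A returned class index would be used as the target for the strongly augmented view;
    None means the unlabeled image contributes no loss in this step.
    """
    probs = softmax(weak_logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best if probs[best] >= threshold else None


if __name__ == "__main__":
    print(fixmatch_pseudo_label([0.1, 6.0, 0.2]))  # confident prediction: kept
    print(fixmatch_pseudo_label([1.0, 1.2, 0.9]))  # uncertain prediction: discarded
```

In a detector, a rule like this would be applied to each predicted box rather than to a whole image, so the resulting pseudo-labels inherit whatever imbalance exists among the detector's confident predictions.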

Figure: Illustration of our proposed Unbiased Teacher.

To overcome these issues, we propose a general framework, Unbiased Teacher, which jointly trains a Student and a slowly progressing Teacher in a mutually beneficial manner: the Teacher generates pseudo-labels to train the Student, and the Student gradually updates the Teacher via Exponential Moving Average (EMA), while the Teacher and Student receive differently augmented input images (see the figure above). For more details about our method, we refer readers to our paper.
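The two Teacher/Student interactions described above can be sketched as follows, under simplifying assumptions: a plain dict of floats stands in for the network weights, and the Teacher's detections are filtered into pseudo-labels with a confidence threshold. The helper names (`ema_update`, `filter_pseudo_boxes`), the threshold of 0.7, and the EMA rate are hypothetical illustration values rather than the paper's exact settings; a real implementation would apply the same update to framework tensors.

```python
from typing import Dict, List, Tuple

Params = Dict[str, float]                # stand-in for network weights (assumption)
Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def ema_update(teacher: Params, student: Params, alpha: float = 0.999) -> Params:
    """EMA step applied to every parameter: teacher <- alpha * teacher + (1 - alpha) * student."""
    return {name: alpha * teacher[name] + (1.0 - alpha) * student[name] for name in teacher}


def filter_pseudo_boxes(
    detections: List[Tuple[Box, int, float]], threshold: float = 0.7
) -> List[Tuple[Box, int]]:
    """Keep Teacher detections (box, class, score) whose score clears the threshold.

    The surviving (box, class) pairs would serve as pseudo-labels for the Student,
    which sees a differently augmented version of the same unlabeled image.
    """
    return [(box, cls) for box, cls, score in detections if score >= threshold]


if __name__ == "__main__":
    # A slowly progressing Teacher: with alpha close to 1, it moves only a
    # small fraction of the way toward the Student at each step.
    teacher, student = {"w": 0.0}, {"w": 1.0}
    for _ in range(10):
        teacher = ema_update(teacher, student, alpha=0.5)
    print(teacher["w"])

    dets = [((0.0, 0.0, 10.0, 10.0), 1, 0.9), ((5.0, 5.0, 8.0, 8.0), 2, 0.3)]
    print(filter_pseudo_boxes(dets))
```

Because the Teacher only receives an averaged copy of the Student's weights rather than gradient updates, its predictions change smoothly over training, which is what makes it a more stable source of pseudo-labels than the Student itself.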